This paper looked at the development and use of a debriefing tool based on Safety-II concepts. I don’t have a lot to say about this one as it’s open access and it mostly just briefly covers the initial piloting of the tool.

As some background, the authors note their inspiration was to develop a tool to help people operationalise a focus on learning not just from bad events but from all events, routine and mundane, and on normal work.

They argue that while debriefing is not new or uncommon, “many common debriefing strategies are more focused on Safety-I” (p1), e.g. they concentrate on learning from what went wrong and pay too little attention to normal work and how work occurs due to capacities, adjustments, variation and adaptation in complex systems.

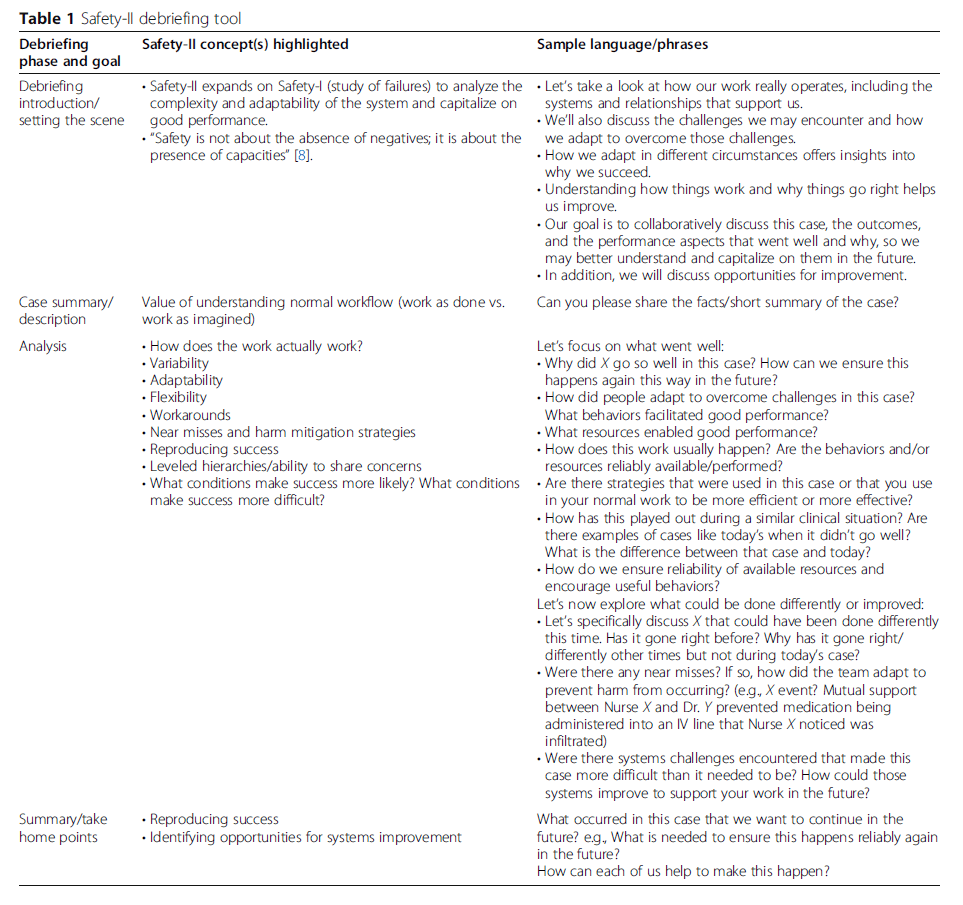

You may argue with the logic, underlying research or methodology but the example questions they ask in the tool may be of interest to my network who are starting out in the S-II space.

[That is, even if you disagree with S-II/new view etc., the sample questions are probably worth asking regardless of ideology.]

Regarding the tool, it was developed iteratively by simulation experts and tested with 2 pilot groups, who provided feedback. Likert-scale questions were also used to gather user feedback on the overall impression of the tool, its readability and its anticipated use.

Results:

Based on limited feedback on the tool during piloting (n = 10), 100% of responses to the questions on utility and usability of the tool were strongly agree or agree. People noted that the tool “added much value to depth of [their] debriefing” or that use of the tool “will be so useful to probe deeper during a ‘smooth’ case” (p4). In another instance, feedback indicated that a user will “definitely use this again, it helped me expand on the ‘why did it go right’ question I always try to ask”, and in another case a user said that this type of tool will help them not to fumble through learning about what goes right (p4).

Several instances were noted of how the question set helped frame discussions about the full spectrum of work; users noted that it helped to give them the “language” to comment on the concepts that they saw happen during work. In one example, it was noted that it seems to be difficult for people to discuss things that went well because they are perhaps so primed to seek out the error or what went wrong, whereas use of tools and concepts like these may also lead to more people drawing on and using the ideas.

Use of the tool was observed to increase both the number and the types of topics discussed during debrief meetings. In this case, the number of topics increased from 14 before tool use to 21 after. In one example, use of the tool led to discussions around gaps between work-as-imagined and work-as-done in how hospital paging systems function.

For those interested, you can find the question-set/tool in the open access paper or extract below.

Study authors: Suzanne K. Bentley, Shannon McNamara, Michael Meguerdichian, Katie Walker, Mary Patterson, Komal Bajaj, 2021, Advances in Simulation, 6, 9

Study link: https://doi.org/10.1186/s41077-021-00163-3

Link to the LinkedIn article: https://www.linkedin.com/feed/update/urn:li:ugcPost:6932452713506975745?updateEntityUrn=urn%3Ali%3Afs_updateV2%3A%28urn%3Ali%3AugcPost%3A6932452713506975745%2CFEED_DETAIL%2CEMPTY%2CDEFAULT%2Cfalse%29