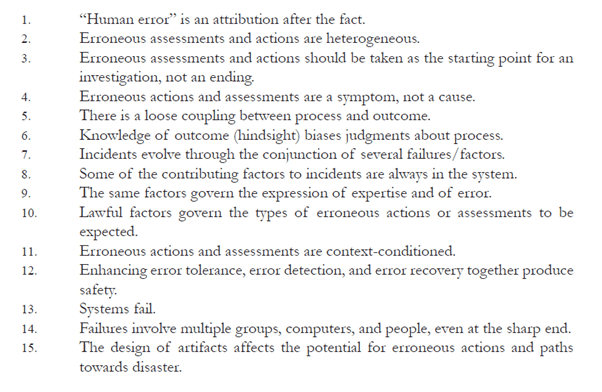

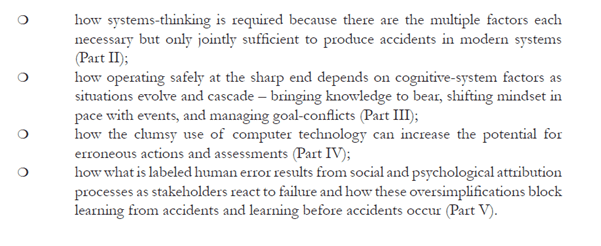

The images above are really just an index from the book “Behind Human Error”, but they nicely summarise some key concepts.

The meaning of most items is obvious, but I’ll explain some of the less obvious statements.

#2, about erroneous assessments/actions being heterogeneous, indicates that performance is contextual, so grouping everything under a neat label of “human error” makes little empirical sense.

#9 relates to how error can’t be separated from “normal human behavior in real work situations”; “Knowledge and error flow from the same mental sources, only success can tell one from the other” (p25) (i.e. hindsight).

Further, understanding “error” in real-world situations requires studying individuals as embedded in a larger system that provides resources and constraints – that is, error is an emergent property of the system rather than (just) something situated in the head of the individual.

#10 indicates that “lawful factors” govern performance. Regarding people, “Errors are not some mysterious product of the fallibility or unpredictability of people; rather errors are regular and predictable consequences of a variety of factors” (p25).

In many cases we have really good situated and theoretical knowledge of what enforces or constrains human performance (where something is or is not more likely to occur; some call these error traps, but it goes more broadly than that). Knowing *exactly when* something will occur is more challenging, but that isn’t particularly critical anyway once we know those conditions.

Quite relevant for my own research are items #5 and #15, which concern the connection between processes/written artefacts and performance. Upstream processes don’t necessarily translate directly, predictably or accurately into downstream action or results.

As we argued in our theory paper and have shown in later papers (yet to be published), not only do examples abound of loose coupling between process and outcomes (such as routines), but in some cases there is virtually no connection at all between process and the behaviour of systems and people.

This is really problematic when you consider how often tweaking a procedure, conducting a risk assessment or undertaking an audit is the response to a serious event.

Nevertheless, writing a plan, tweaking a process (like confined space entry), or creating a risk assessment is very cathartic.

It feels like we’ve done something substantive, all the while not really addressing the underlying issue we intended to.

Source of images: Woods, D. D., Dekker, S., Cook, R., Johannesen, L., & Sarter, N. (2010). Behind human error. Ashgate.
