A fascinating read from Carl Macrae (his work is always good – I’ve covered a few of his papers), exploring the sociotechnical sources of risk in autonomous and intelligent systems (AIS).

The case study is a reanalysis of the fatal 2018 Uber self-driving car crash, drawing on multiple reports.

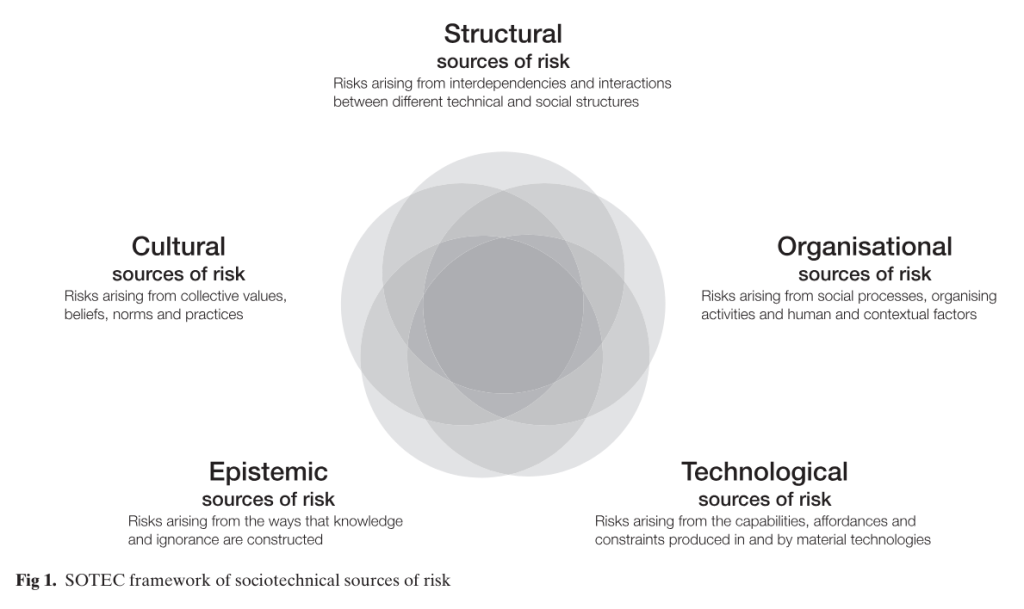

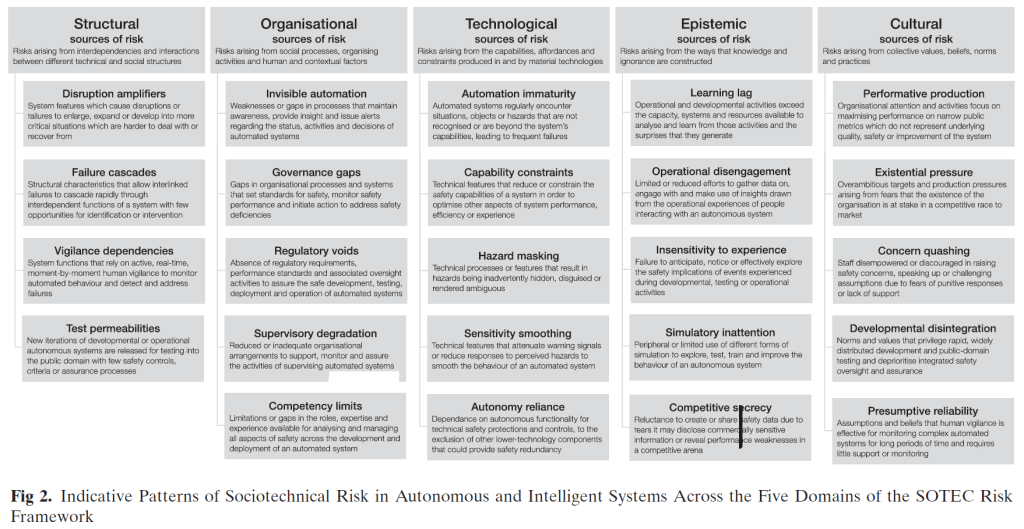

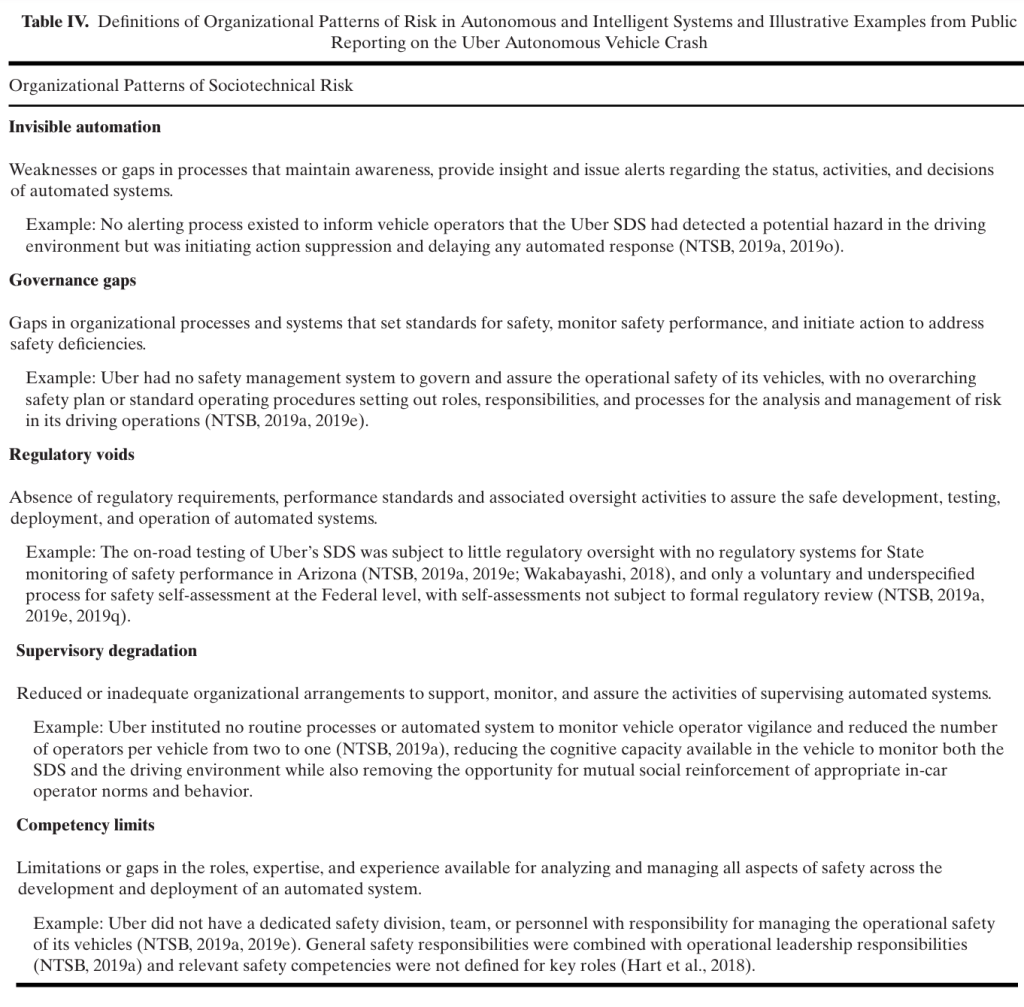

Five sources of sociotechnical risk were conceptualised:

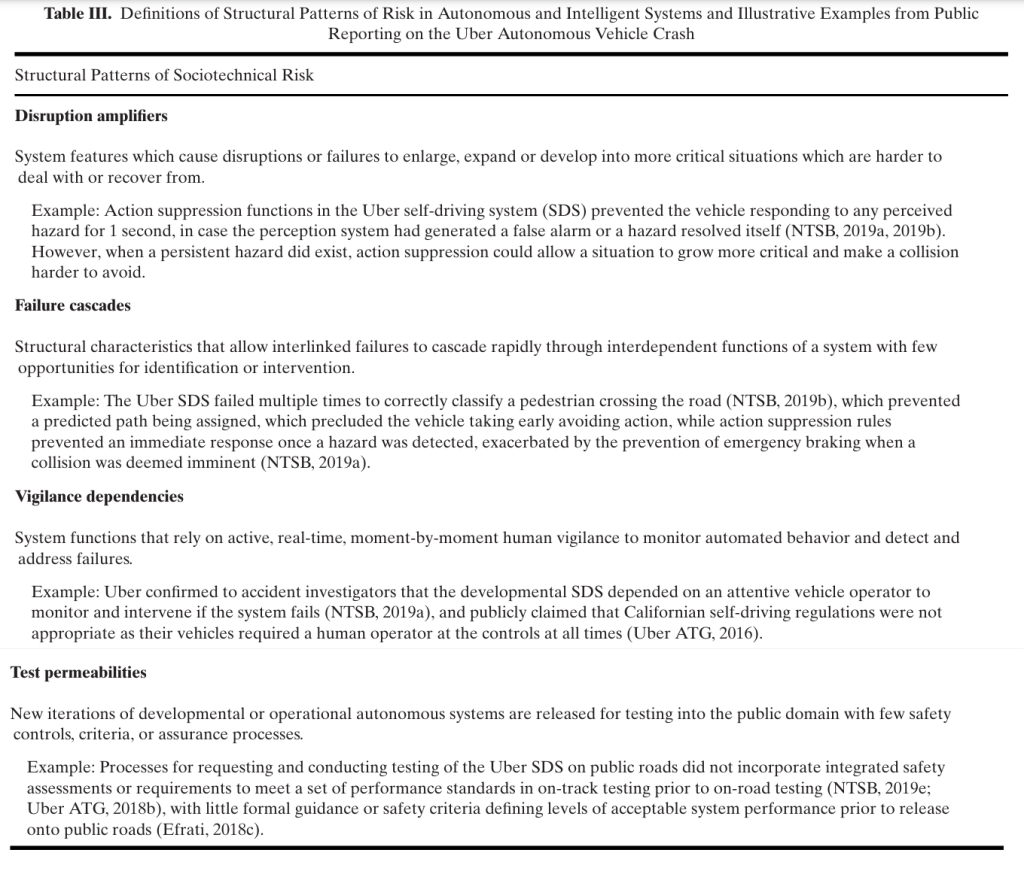

· Structural

· Organisational

· Technological

· Epistemic

· Cultural

Some of the risks I found particularly interesting were:

· Vigilance dependencies: system functions requiring active, real-time human vigilance to monitor automated behaviour and address failures

· Invisible automation: gaps in the processes that maintain awareness of, provide insight into, and issue alerts on the status and decisions of automated systems

· Hazard masking: technical processes that result in hazards being inadvertently hidden, disguised or ambiguous

· Sensitivity smoothing: technological features that attenuate warning signals to smooth the behaviour of automated systems

· Autonomy reliance: dependence on autonomous functionality for technical safety protections when lower-technology alternatives could provide safety redundancy

· Competitive secrecy: reluctance to share safety data due to fears it may disclose commercially sensitive information

· Existential pressure: overambitious targets and production pressures

· Presumptive reliability: assumptions that human vigilance is effective at monitoring complex automated systems over long periods

Check out the full paper – you won’t be disappointed.

Ref: Macrae, C. (2022). Learning from the failure of autonomous and intelligent systems: Accidents, safety, and sociotechnical sources of risk. Risk Analysis, 42(9), 1999–2025.

Study link: https://onlinelibrary.wiley.com/doi/pdfdirect/10.1111/risa.13850

My site with more reviews: https://safety177496371.wordpress.com
