Not another CrowdStrike post – but this reminded me of some comments from Nancy Leveson about the interactive complexity of software and the sometimes “impossible” task of thoroughly understanding its failure modes.

Some cherry-picked examples from several of Nancy’s sources:

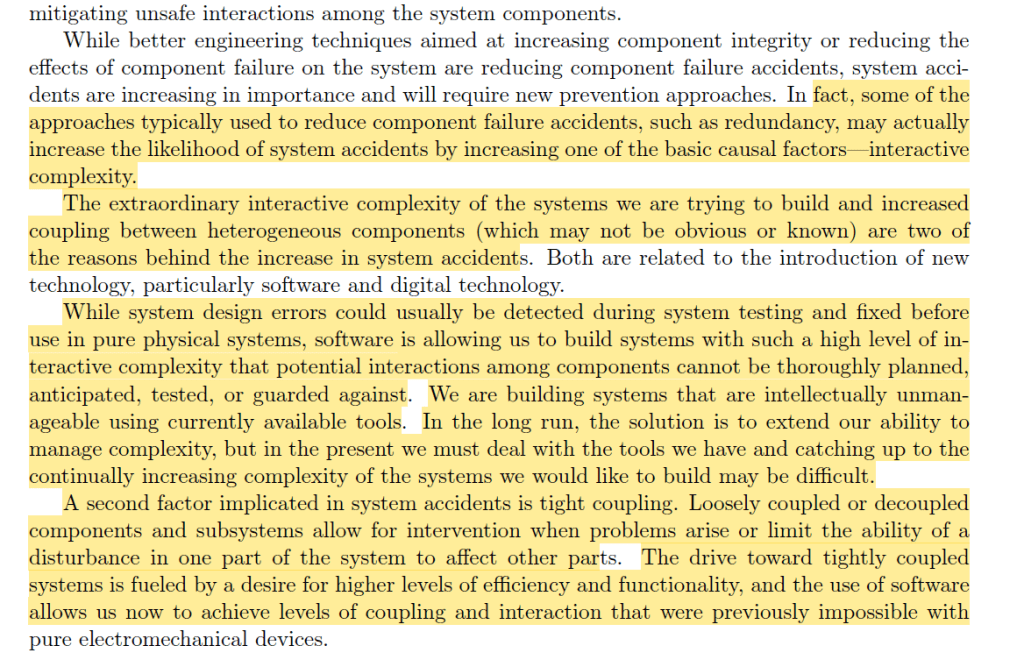

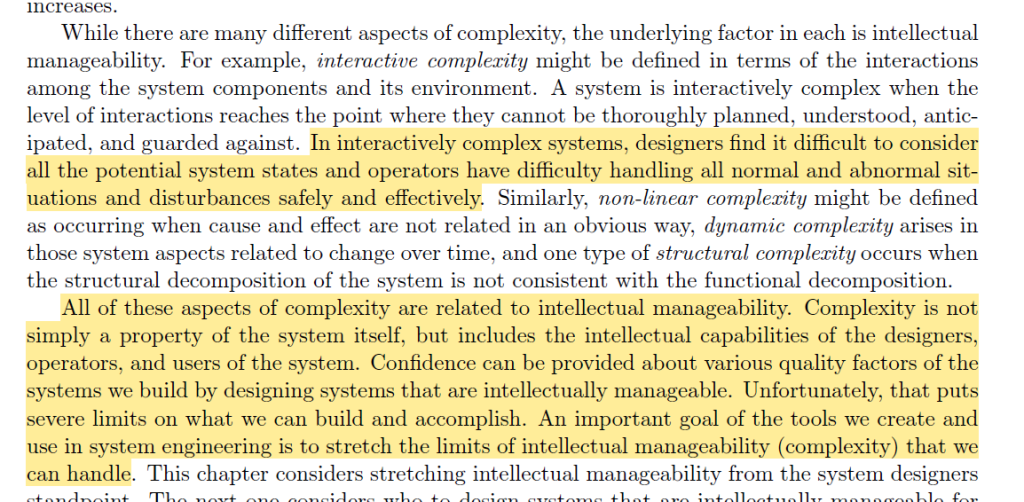

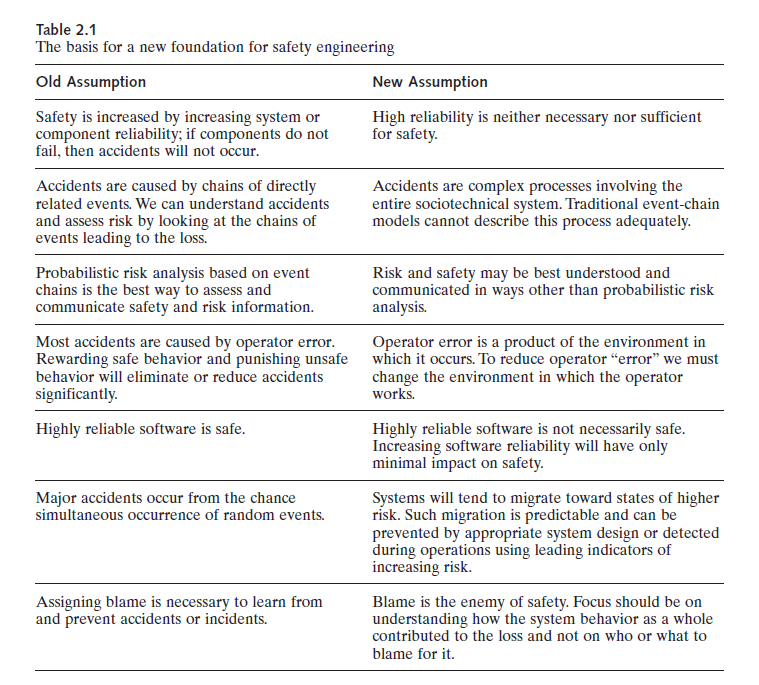

· “Complexity has many facets, most of which are increasing in the systems we are building, particularly interactive complexity”

· “We are designing systems with potential interactions among the components that cannot be thoroughly planned, understood, anticipated, or guarded against”

· “The operation of some systems is so complex that it defies the understanding of all but a few experts, and sometimes even they have incomplete information about its potential behavior.”

· “Software is an important factor here—it has allowed us to implement more integrated, multi-loop control in systems containing large numbers of dynamically interacting components where tight coupling allows disruptions or dysfunctional interactions in one part of the system to have far-ranging rippling effects”

· “The problem is that we are attempting to build systems that are beyond our ability to intellectually manage: Increased interactive complexity and coupling make it difficult for the designers to consider all the potential system states or for operators to handle all normal and abnormal situations and disturbances safely and effectively”

· Software testing and simulation can never be comprehensive or complete for complex software systems. Moreover, “simulation can show only that we have handled the things we thought of, not the ones we did not think about, assumed were impossible, or unintentionally left out of the simulation environment”

· Regarding formal verification: “Virtually all accidents involving software stem from unsafe requirements, not implementation errors… When I look at accidents where it is claimed the implemented software logic has led to the loss, I always find the software logic flaws stem from a lack of adequate requirements”

· “Formal verification (or even formal validation) can show only the consistency of two formal models. Complete discrete mathematical models do not exist of complex physical systems”

· “Software allows us to build systems of such high interactive complexity that it is difficult, and sometimes impossible, to plan, understand, anticipate, and guard against potential unintended interactions”

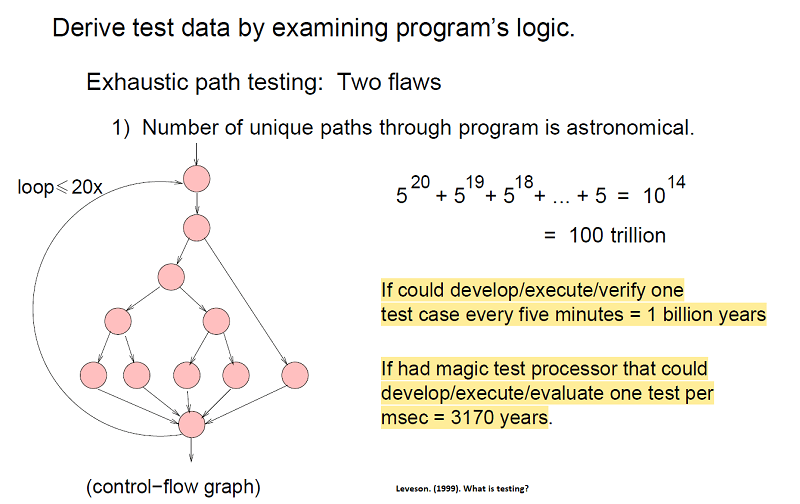

· As an example, thoroughly testing software logic for all permutations and failure modes could theoretically take thousands or even billions of years

· For instance, “TCAS—an aircraft collision avoidance system—was estimated to have 10^40 possible states”
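To get a feel for what 10^40 states means, here is a rough back-of-the-envelope sketch. The checking rate of one billion states per second is purely my assumption for illustration (it is not a figure from Leveson), and the function name is hypothetical. Only the 10^40 estimate comes from the quote above.

```python
# Back-of-the-envelope: how long would it take to visit every state once?
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year, about 3.16e7 seconds


def years_to_enumerate(num_states: int, states_per_second: float) -> float:
    """Years needed to visit every state once at a fixed checking rate."""
    return num_states / states_per_second / SECONDS_PER_YEAR


TCAS_STATES = 10 ** 40   # estimate quoted above
ASSUMED_RATE = 1e9       # assumption: one billion states checked per second

years = years_to_enumerate(TCAS_STATES, ASSUMED_RATE)
print(f"{years:.2e} years")  # prints "3.17e+23 years"
```

Under that assumed rate the answer lands around 10^23 years, far beyond even "billions". Changing the rate by a few orders of magnitude does not change the conclusion, which is the point: exhaustive testing of a state space this size is not a practical option.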

NB. I’m not saying this is the case with CrowdStrike – this is just a general post on modern, complex, hazardous technical systems.

Sources:

1. Leveson (1999). What is testing?

2. Leveson (2020). Are You Sure Your Software Will Not Kill Anyone?

3. Leveson (2002). New Approach To System Safety Engineering

Links:

2. https://cacm.acm.org/opinion/are-you-sure-your-software-will-not-kill-anyone/

3. https://dspace.mit.edu/bitstream/handle/1721.1/71860/16-358j-spring-2005/contents/readings/book2.pdf

4. My site with more reviews: https://safety177496371.wordpress.com