How can we make injury metrics suck less?

So this came out of a conversation with somebody (thanks Jordan Vince).

I’m **not** a promoter of injury measures. I think we spend FAR too much time quibbling over what are, statistically speaking, quite rare events, when we have the entire spectrum of daily work to learn from.

There are also more interesting things to focus on, like hazardous and complex work, critical controls, etc.

But, whatever. Injury measures can be, and still are, useful, with serious caveats.

I like Matthew Hallowell and team’s suggestions on injury measures. If they must be used, then at least try to improve their statistical basis.

They suggest:

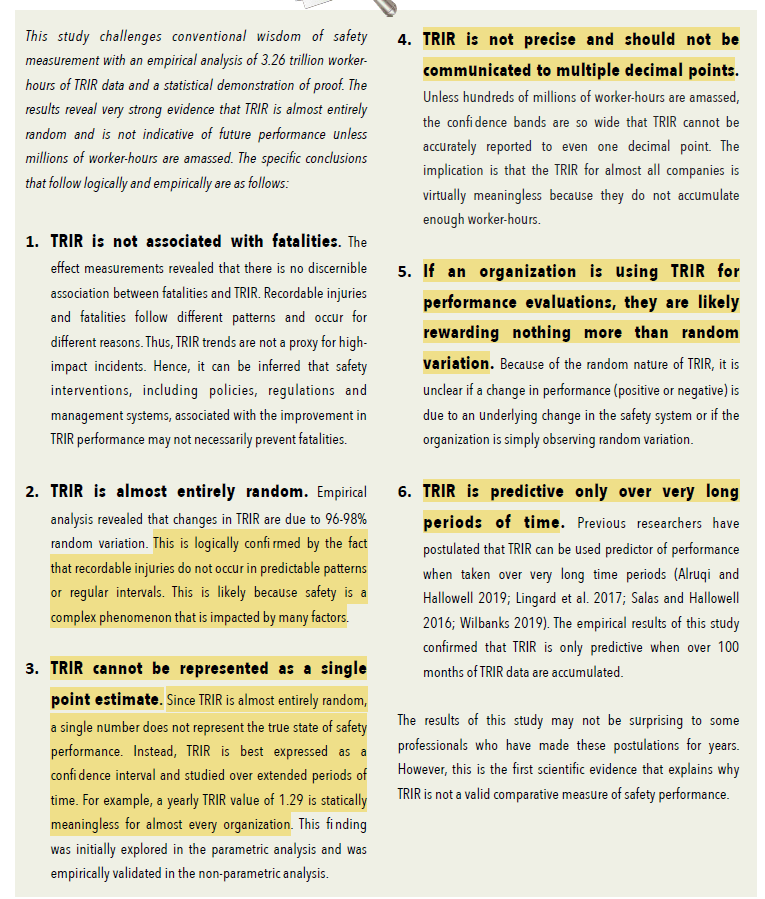

· Use a range instead of a single point estimate

· Carve off the decimals (report 1 instead of 1.1)

· Decouple the measures from decision-making (as they put it, “If an organization is using TRIR for performance evaluations, they are likely rewarding nothing more than random variation”)

· Increase the sample size, and hence statistical power (e.g. by pooling data over longer periods of time)

· Don’t use them to track internal performance, or at least not to compare projects or teams (see sample size and statistical power)

· By extension, be cautious about using them to gauge contractor ‘performance’ or in tendering
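
To make the “use a range” and “sample size” points concrete, here’s a minimal Python sketch. It assumes the common OSHA-style TRIR definition (recordable cases × 200,000 / hours worked) and treats recordables as Poisson counts, putting an exact (Garwood) interval around the rate via bisection. The function names and numbers are hypothetical, and this isn’t necessarily the exact method used in Hallowell et al.’s paper, just one standard way to get a range.

```python
import math


def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam), summing terms in log space for stability."""
    if k < 0:
        return 0.0
    if lam == 0:
        return 1.0
    total = sum(
        math.exp(i * math.log(lam) - lam - math.lgamma(i + 1)) for i in range(k + 1)
    )
    return min(total, 1.0)


def _solve_increasing(f, lo: float, hi: float, iters: int = 200) -> float:
    """Bisection for the root of an increasing function f with f(lo) < 0 < f(hi)."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def trir_with_range(recordables: int, hours: float, conf: float = 0.95):
    """Point TRIR plus an exact (Garwood) Poisson interval, per 200,000 hours.

    Assumes recordable cases are Poisson counts over the hours worked.
    """
    k, alpha = recordables, 1 - conf
    hi = 10.0 * (k + 10)  # generous upper search bound for the count-scale rate
    # Lower limit: the rate at which seeing k or more cases has probability alpha/2.
    lower = 0.0 if k == 0 else _solve_increasing(
        lambda lam: (1 - poisson_cdf(k - 1, lam)) - alpha / 2, 0.0, hi
    )
    # Upper limit: the rate at which seeing k or fewer cases has probability alpha/2.
    upper = _solve_increasing(lambda lam: alpha / 2 - poisson_cdf(k, lam), 0.0, hi)
    scale = 200_000 / hours  # converts a count over `hours` into a rate per 200k hours
    return k * scale, lower * scale, upper * scale


if __name__ == "__main__":
    # Hypothetical numbers: same TRIR (1.2), but ten times the hours in the second case.
    for cases, hrs in [(3, 500_000), (30, 5_000_000)]:
        est, lo, hi = trir_with_range(cases, hrs)
        print(f"{cases} cases / {hrs:,} h: TRIR {est:.0f} (95% range {lo:.1f} to {hi:.1f})")
```

Both sites report the same TRIR, but with 3 cases the 95% range runs from roughly 0.2 to 3.5, while ten times the hours shrinks it several-fold. Printing the point estimate with no decimals is a nod to the “carve off the decimals” suggestion.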

I also like David Oswald’s suggestion of coupling quantitative indicators with qualitative ones; one gives you the what, the other gives you the rich narrative on how (and why it matters).

There are also plenty of other tools to improve the use of injury measures. Control charts are a favourite of mine (see image 2), and you can use charts suited to count data, like Poisson or negative binomial charts.
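
As one concrete example of a Poisson-based chart, here’s a minimal u-chart sketch in Python. Each period’s injury rate is compared against a centre line (the pooled rate) with 3-sigma limits that widen when fewer hours are worked. The function and the monthly data are hypothetical; if the counts are overdispersed, a negative binomial or Laney u′ chart would be the next step, which this sketch doesn’t handle.

```python
import math


def u_chart(counts, hours, per=200_000):
    """Centre line and 3-sigma limits for a u-chart of injury rates.

    counts: recordable cases per period; hours: hours worked per period.
    Rates are per `per` hours (200,000 by the usual TRIR convention).
    Assumes Poisson variation; overdispersed data would need wider limits.
    """
    if len(counts) != len(hours):
        raise ValueError("counts and hours must have the same length")
    exposure = [h / per for h in hours]       # exposure in units of `per` hours
    u_bar = sum(counts) / sum(exposure)       # pooled rate = centre line
    rows = []
    for c, n in zip(counts, exposure):
        rate = c / n
        sigma = math.sqrt(u_bar / n)          # Poisson SD of a rate observed over exposure n
        lcl = max(0.0, u_bar - 3 * sigma)     # a rate cannot go below zero
        ucl = u_bar + 3 * sigma
        rows.append({"rate": rate, "lcl": lcl, "ucl": ucl,
                     "signal": rate > ucl or rate < lcl})
    return u_bar, rows


# Hypothetical monthly data for one site (invented for illustration).
monthly_cases = [2, 1, 3, 0, 2, 1, 4, 2, 1, 3, 2, 9]
monthly_hours = [80_000] * 12
centre, chart = u_chart(monthly_cases, monthly_hours)
for month, r in enumerate(chart, start=1):
    flag = "  <- outside limits" if r["signal"] else ""
    print(f"month {month:2d}: rate {r['rate']:5.1f} (limits {r['lcl']:.1f} to {r['ucl']:.1f}){flag}")
```

With these made-up numbers only month 12 falls outside the limits; month 7’s jump to a rate of 10 stays inside them, i.e. it’s consistent with random variation alone.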

There are also statistical methods like significance testing, confidence intervals and more.
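
As an example of significance testing, here’s a minimal sketch of one standard option (my choice, not from the sources above): the exact conditional test for two Poisson rates. If two sites have the same underlying rate, then given the total number of cases, site A’s count is binomial with p equal to A’s share of the hours. The function names and numbers are hypothetical.

```python
import math


def binom_pmf(x: int, n: int, p: float) -> float:
    """Binomial probability of exactly x successes in n trials."""
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)


def compare_rates(count_a: int, hours_a: float, count_b: int, hours_b: float) -> float:
    """Two-sided exact p-value for H0: sites A and B have the same injury rate.

    Conditional on the total n = count_a + count_b, count_a ~ Binomial(n, p0)
    with p0 = hours_a / (hours_a + hours_b) under H0. The two-sided p-value
    sums the probabilities of all outcomes no more likely than the observed one.
    """
    n = count_a + count_b
    p0 = hours_a / (hours_a + hours_b)
    observed = binom_pmf(count_a, n, p0)
    probs = [binom_pmf(x, n, p0) for x in range(n + 1)]
    p_value = sum(pr for pr in probs if pr <= observed * (1 + 1e-7))  # tolerance for float ties
    return min(1.0, p_value)


# Hypothetical: site A has TRIR 2.0, site B has TRIR 1.0, same hours worked.
print(compare_rates(4, 400_000, 2, 400_000))
```

Here one site’s TRIR is double the other’s, yet the p-value is about 0.69: nowhere near enough evidence that the two sites actually differ.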

What else?

Refs:

1) Hallowell, M., Quashne, M., Salas, R., MacLean, B., & Quinn, E. (2021). The statistical invalidity of TRIR as a measure of safety performance. Professional Safety, 66(04), 28-34. (images 1 & 3)

2) Anhøj, J., & Hellesøe, A.-M. B. (2017). BMJ Quality & Safety. (image 2)

Articles: