“There was a very real sense in which all three parties were simply going through the motions together of producing ‘paper safety’”

This is a nearly 600-page inquiry report into the 2006 loss of Nimrod XV230, a military aircraft. Below are just a few extracts I found interesting regarding broader safety management.

It’s a fantastic read and a masterclass in the problems of safety management (paperwork logics, clutter, safety work, false safety etc.).

I’m not explaining the accident or any of the background, and will mostly use quotes, as Haddon-Cave et al. describe it better than I can.

I’m also mixing and conflating the responsibilities levelled at certain stakeholders into the same sections, as the distinction isn’t so relevant to this post (more interesting are the broader/general learnings).

General Background Comments

“The Nimrod Safety Case process was fatally undermined by a general malaise: a widespread assumption by those involved that the Nimrod was ‘safe anyway’ (because it had successfully flown for 30 years) and the task of drawing up the Safety Case became essentially a paperwork and ‘tickbox’ exercise”.

The inquiry notes the role of BAE Systems in the accident. For one, it’s said that the work was “poorly planned, poorly managed and poorly executed, work was rushed and corners were cut. The end product was seriously defective”.

40% of the aircraft hazards had been left ‘Open’ and 30% ‘Unclassified’. One of the hazards linked to the genesis of the catastrophic onboard fire was among those left open and unclassified. At handover meetings, these open or unclassified hazards were coupled with sometimes “only vague recommendations that ‘further work’ was required”. Nor did BAE Systems disclose the scale of these open and unclassified hazards to the customer.

In any case, it’s said that stakeholders “were lulled into a false sense of security”.

The Nimrod integrated project team (IPT) also played a substantial role in the accident. The inquiry says that they delegated project management to a relatively junior person “without adequate oversight or supervision”, had inadequate SME involvement, “failed to read the BAE Systems Reports carefully or otherwise check BAE Systems’ work”, didn’t follow their own SMS plan and more.

Also, the inquiry points to issues with the risk assessment process: “the Nimrod IPT sentenced the outstanding risks on a manifestly inadequate, flawed and unrealistic basis, and in doing so mis-categorised the catastrophic fire”. A major risk was sentenced as ‘Tolerable’ when “it plainly was not”.

Interestingly, the inquiry notes that the Nimrod project team “outsourced its thinking” (emphasis added).

Safety Case Failures

Lots covered on safety cases, so just a few extracts.

For the inquiry, the safety case “represented the best opportunity to capture the serious design flaws in the Nimrod … that had lain dormant for the decades before the accident to XV230”.

In their view, shaped by hindsight (which they recognise), a “careful Safety Case would, and should, have highlighted the catastrophic risks to the Nimrod fleet [and if it] had been properly carried out, the loss of XV230 would have been avoided”.

[** Personally, I don’t think these types of counterfactuals are as helpful as people think they are]

Nevertheless, the Nimrod safety case “was a lamentable job from start to finish. It was riddled with errors. It missed the key dangers. Its production is a story of incompetence, complacency and cynicism”.

Indeed, they further argue that the safety case “was essentially a ‘paperwork’ exercise. It was virtually worthless as a safety tool” (emphasis added).

One of the key issues with the approach to the safety case is the belief system at play: the work on all sides was fatally undermined by the assumption that the Nimrod was “safe anyway”.

Existing aircraft would undergo an ‘implicit safety case’, which negated the requirement for a full prospective safety case. Instead, the implicit safety case was a general assessment “to assure that there were no known reasons not to accept the current clearances and underlying safety evidence”.

That is, “the fact that an aircraft had been built to a standard applicable at its build date, had received the necessary clearances and operated satisfactorily since then could be taken as acceptable in lieu of an explicit Safety Case, provided that a general safety assessment did not reveal any evidence to the contrary”.

The inquiry criticises the notion of the implicit safety case. First, one either does or does not prepare a safety case (no such thing as implicit – it’s either explicit/completed, or not). An implicit safety case also doesn’t align with Lord Cullen’s concept of a thorough assessment because “the notion assumes that a legacy aircraft is safe merely because it was built to design and has been operating without mishap for a number of years”.

In contrast, systems must be assessed on a reverse burden of proof: “the activities are not presumed innocent until proven guilty”, i.e. the system is to be considered unsafe until it is proven (argued/reasoned) to be safe. The safety case, according to Cullen, was more about the company “assuring itself that its operations were safe”, with convincing the regulator being a secondary concern.

However, this self-assurance was problematic because “there was no real need for them to assure themselves of something that they assumed to be the case, i.e. that the Nimrod was safe. This attitude was unfortunate and served to undermine the integrity and rigour of the Safety Case process itself”.

Organisational & Safety Management Failures

They state:

“Organisational causes played a major part in the loss of XV230. Organisational causes adversely affected the ability of the Nimrod IPT to do its job, the oversight to which it was subject, and the culture within which it operated, during the crucial years when the Nimrod Safety Case was being prepared”.

I’ve skipped a lot here.

They say that “Accidents are indications of failure on the part of management and that, whilst individuals are responsible for their own actions, only managers have the authority to correct the attitude, resource and organisational deficiencies which commonly cause accidents”.

System safety critically relies on people being involved throughout the safety lifecycle. Hence, safety assessments “must not be viewed as a one-off exercise: people should be continuously trying to make things safer”.

And, importantly, “The non-occurrence of system accidents or incidents is no guarantee of a safe system”.

The inquiry points to the huge changes that the Ministry of Defence (MOD) went through over the years, which impacted airworthiness arrangements.

Three core changes were: (quoting the inquiry)

· a shift from organisation along purely ‘functional’ to project-oriented lines

· the ‘rolling up’ of organisations to create larger and larger ‘purple’ and ‘throughlife’ management structures

· ‘outsourcing’ to industry

These changes were discussed in the context of financial / production conflicts. Like with Nimrod, there was a “conflict between ever-reducing resources and … increasing demands; whether they be operational, financial, legislative, or merely those symptomatic of keeping an old ac flying”.

Further, at the MOD there was additional “organisational trauma”, with financial pressures and cost cutting which led to “a cascade of multifarious organisational changes, which led to a dilution of the airworthiness regime and culture within the MOD, and distraction from safety and airworthiness issues as the top priority”.

The MOD also shifted its approach towards more of a business with financial targets “at the expense of functional values such as safety and airworthiness”.

The inquiry says it’s during these crunch times that MORE resources are needed to ensure system safety, not fewer.

Some accidents prior to the Nimrod event were said to be relevant for learning but weren’t heeded [** as is often the case in hindsight]. For the inquiry, these “incidents represented missed opportunities to spot risks, patterns and potential problems, and for these lessons to be read across to other aircraft”.

For one, many of these prior incidents were “treated in isolation as ‘one-off’ incidents with little further thought being given to potential systemic issues, risks or implications once the particular problem on that aircraft was dealt with”. It was said to be rare that somebody would see how these findings applied across the broader profile.

There was also a “lack of corporate memory as to related incidents which had occurred in the past”. And, “No-one was taking a sufficient overall view”.

The inquiry again takes aim at the flawed risk assessment processes. I found this interesting about quantification in risk assessments:

It was specifically stated that such quantification “must support, not replace, sound judgment” and that “probabilities must not be ‘tweaked’ to artificially move hazards or accidents into a more favourable category” (emphasis added).

When risk assessments or indications were “borderline”, it’s advised that the “more stringent criteria should always be applied to ensure appropriate management action is taken”.
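To make the “borderline” rule a bit more concrete, here is a minimal Python sketch of how it could work in a probability-banded risk assessment. Everything specific in it is my own assumption for illustration: the band names other than ‘Improbable’, the numeric boundaries, and the `margin` parameter that defines what counts as borderline. None of these values come from the inquiry.

```python
# Hypothetical probability bands, ordered from most to least stringent.
# Each entry is (category, lower bound of the estimated probability).
# The names and numbers are illustrative assumptions, not from the inquiry.
BANDS = [
    ("Frequent", 1e-2),
    ("Probable", 1e-3),
    ("Occasional", 1e-4),
    ("Remote", 1e-5),
    ("Improbable", 0.0),
]


def categorise(probability: float) -> str:
    """Return the band that a point estimate falls into."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    for name, lower_bound in BANDS:
        if probability >= lower_bound:
            return name
    raise AssertionError("unreachable: the last band starts at 0.0")


def categorise_conservatively(probability: float, margin: float = 2.0) -> str:
    """Apply the more stringent band when the estimate is borderline.

    An estimate is treated as borderline (an assumption of this sketch)
    if it lies within a factor of `margin` below the boundary of the
    next, more stringent band.
    """
    if margin < 1.0:
        raise ValueError("margin must be at least 1")
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # Scaling the estimate up by the margin pushes borderline values
    # over the boundary; clearly non-borderline values stay in their band.
    return categorise(min(probability * margin, 1.0))
```

With these made-up bands, an estimate of `6e-6` sits just under the ‘Remote’ boundary, so `categorise` returns ‘Improbable’ while `categorise_conservatively` returns ‘Remote’. The point of the design is that uncertainty near a boundary only ever moves a hazard into the less favourable category, never the other way round.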

The inquiry also pointed the finger towards culture, noting that BAE Systems had “failed to implement an adequate or effective culture, committed to safety and ethical conduct. The responsibility for this must lie with the leadership of the Company”.

Paper Systems and False Safety

Although this wasn’t a separate section, the inquiry refers to the problems with ‘paper systems’, like with the SMS or safety case. I’ve got some examples below.

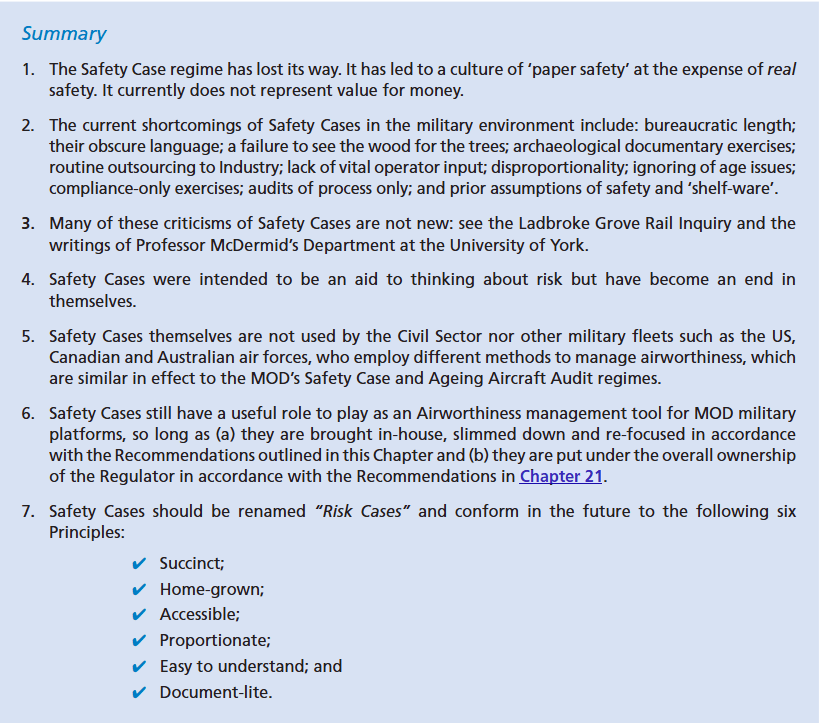

The inquiry argued that the “Safety Case regime has lost its way. It has led to a culture of ‘paper safety’ at the expense of real safety” (emphasis added).

For those producing the safety case, “There was a very real sense in which all three parties were simply going through the motions together of producing ‘paper safety’; two were getting paid and one was ticking a regulatory box which needed to be ticked”.

The safety case was intended to be a means to an end, to “provide a structure for critical analysis and thinking, or a framework to facilitate a thorough assessment and addressing of serious risks”. However, the “production of a ‘Report’ became an end in itself”.

Instead of a structured process for critical analysis, “Critical analysis descended into a paperwork exercise. Compliance with regulations was the aim”, fatally undermined by the assumption that it was “safe anyway”.

Moreover, contracting out the safety case was also challenged. E.g. as per one expert, “there is also a danger in merely contracting for a Safety Case ‘report’, as opposed to a proper ‘risk analysis’. If one contracts for the former, then this is precisely what one is likely to get”.

Also, the safety case is said to be “pointless”, if it’s just about “making explicit what is (assumed to be) implicit, i.e. documenting the past”.

The process of their safety case was said to be an “archaeological exercise”. It was more focused on digging up existing/legacy paperwork to demonstrate that the aircraft complied with the original standards etc. than on whether it is safe now.

The inquiry noted “I fail to see that merely demonstrating that the aircraft was designed and manufactured in compliance with certification standards 30 years ago allows any conclusion to be drawn as to the current safety of the aircraft”.

Further, “even though a trawl through the records may have the attraction of being superficially comforting, as well as relatively undemanding work, it is likely to prove time-consuming and un-rewarding”.

BAE Systems was said to have spent an “inordinate amount of time conducting documentary archaeology, i.e. trawling through historical design data, but later skimped on the crucial hazard analysis”.

Evidence for ‘safety’ rather than ‘unsafety’

Just a few extracts here I found interesting. It’s argued that “the task or aim he was given was to look for evidence that would demonstrate the risk was ‘Improbable’”.

Therefore, “Herein lay the seeds of the problem: the task was not to find out what the real risks were so much as to document that risks were ‘Improbable’, i.e. safe”.

‘Blurb’ and the ‘thud factor’

I also really liked this section on the blurb or thud factor. Quoting the report: “In my view, much of the documentation produced by BAE Systems … contained a great deal of what might politely be described as ‘padding’ or ‘blurb’”. This is also known as ‘thud’.

It’s argued that, like many consultants’ reports, there is a lot of padding to “give the client the impression that he was getting a substantial piece of analysis”.

Clients are said to be more impressed when the report is weighty and substantive, and they feel like they are getting value for money.

The BAE Systems reports “contained much unnecessary repetition”. I’ve skipped the examples, but it’s said that much of the repetition adds “little value but length to the latter and making them less digestible”.

Report link: https://assets.publishing.service.gov.uk/media/5a7c652640f0b62aff6c1609/1025.pdf

LinkedIn post: https://www.linkedin.com/pulse/nimrod-accident-inquiry-exploration-paper-safety-false-ben-hutchinson-0kbjc