Leveson & Cutcher-Gershenfeld discuss systems safety in the context of the Columbia Accident Investigation Board (CAIB) investigation.

NB. These types of analyses are, of course, replete with hindsight and outcome logic, and sometimes with judgmental attributions (e.g. “failure”, “inadequate”). But that doesn’t mean we can’t learn anything from them.

Extracts:

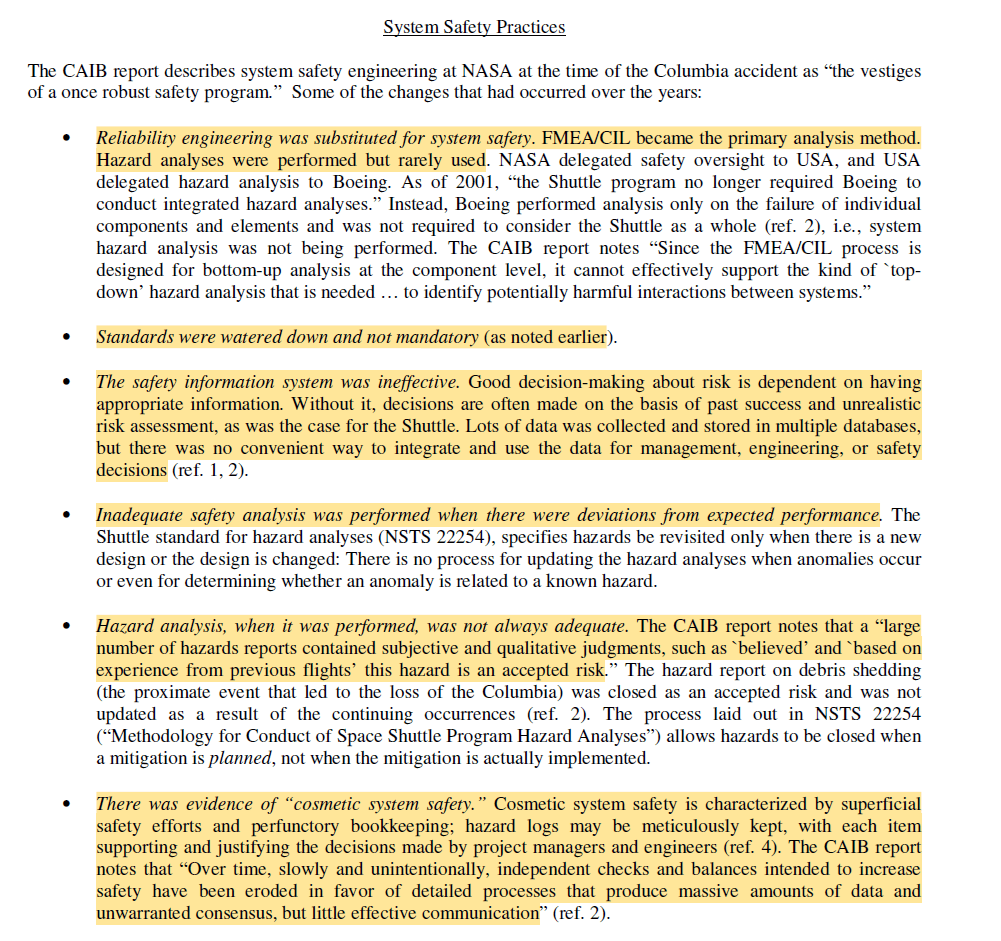

· “The CAIB report describes system safety engineering at NASA at the time of the Columbia accident as ‘the vestiges of a once robust safety program’”

· “The safety information system was ineffective. Good decision-making about risk is dependent on having appropriate information. Without it, decisions are often made on the basis of past success and unrealistic risk assessment, as was the case for the Shuttle”

· “Lots of data was collected and stored in multiple databases, but there was no convenient way to integrate and use the data”

· a “large number of hazard reports contained subjective and qualitative judgments, such as ‘believed’ and ‘based on experience from previous flights’ this hazard is an accepted risk”

· “The hazard report on debris shedding (the proximate event that led to the loss of the Columbia) was closed as an accepted risk and was not updated as a result of the continuing occurrences”

· “There was evidence of ‘cosmetic system safety’”

· “Cosmetic system safety is characterized by superficial safety efforts and perfunctory bookkeeping; hazard logs may be meticulously kept, with each item supporting and justifying the decisions made by project managers and engineers”

· “Over time, slowly and unintentionally, independent checks and balances intended to increase safety have been eroded in favor of detailed processes that produce massive amounts of data and unwarranted consensus, but little effective communication”

· They discuss the migration towards accident (drift), where “By the eve of the Columbia accident, inadequate concern over deviations from expected performance, a silent safety program, and schedule pressure had returned to NASA”

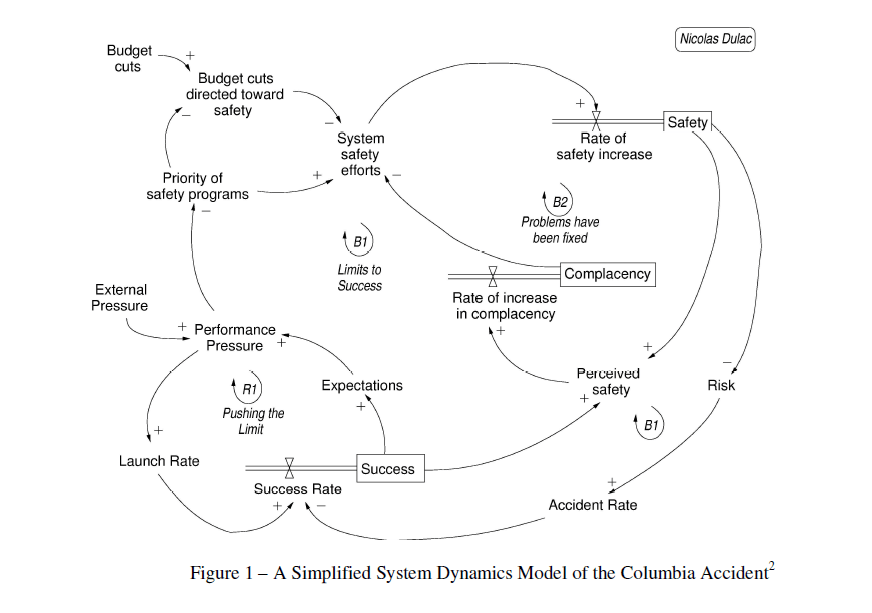

· They provide a simplified system dynamics model of the Columbia accident: as external pressure increased, so did performance pressure, which then “led to increased launch rates and thus success in meeting the launch rate expectations, which in turn led to increased expectations and increasing performance pressures”

· This, “of course, is an unstable system and cannot be maintained indefinitely”

· “The external influences of budget cuts and increasing performance pressures reduced the priority of system safety procedures and led to a decrease in system safety efforts”

· “One thing not shown in the figure is that these models also can contain delays. While reduction in safety efforts and lower prioritization of safety concerns may lead to accidents, accidents usually do not occur for a while so false confidence is created that the reductions are having no impact on safety”
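To make the reinforcing loop and the delay a bit more concrete, here is a toy simulation in Python. It is my own illustrative sketch, not the authors’ model: the function name `simulate` and the growth rate, safety decrement and delay length are all hypothetical parameters chosen only to show the shape of the behaviour. Meeting the launch rate raises expectations, which raise pressure, so the launch rate grows without bound (the “unstable system”), while safety effort is eroded in proportion to that pressure. Latent risk only becomes observable after a fixed delay, so the observed risk stays at zero for the first few steps even though safety effort is already falling.

```python
from collections import deque


def simulate(steps=20, delay=6, growth=0.1, erosion=0.05):
    """Toy reinforcing loop with a delayed safety consequence.

    All parameters are hypothetical and for illustration only.
    Returns rows of (step, launch_rate, safety_effort, observed_risk).
    """
    expectations = 1.0
    safety_effort = 1.0
    # Risk that has been created but not yet observed; starts empty (zeros).
    pipeline = deque([0.0] * delay)
    history = []
    for t in range(steps):
        pressure = expectations                     # performance pressure tracks expectations
        launch_rate = pressure                      # assume launches rise to meet the pressure
        expectations = launch_rate * (1 + growth)   # meeting the rate raises expectations
        # Safety is deprioritised in proportion to pressure (floored at zero).
        safety_effort = max(0.0, safety_effort - erosion * pressure)
        pipeline.append(1.0 - safety_effort)        # latent risk enters the delay line
        observed_risk = pipeline.popleft()          # only risk from `delay` steps ago shows up
        history.append((t, round(launch_rate, 3), round(safety_effort, 3), round(observed_risk, 3)))
    return history


if __name__ == "__main__":
    for row in simulate():
        print(row)
```

Printing the rows shows the launch rate climbing every step while the observed risk column reads 0.0 for the first `delay` steps: the window of false confidence the authors describe, during which the reductions appear to be having no impact on safety.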

Study link: http://sunnyday.mit.edu/papers/issc04-final.pdf

I always check whether a paper is new to me or one I have already read. The date of the paper (or its full reference) helps me, and you usually add that under the review. Not this time, and it turns out the original paper is undated. Luckily Google Scholar helped me out: 2004, a classic of hers but nothing new. But thanks for highlighting this seminal piece again.
