#AI: Structural vs Algorithmic Hallucinations

There are several typologies that sort hallucinations into different types – this is just one I recently saw.

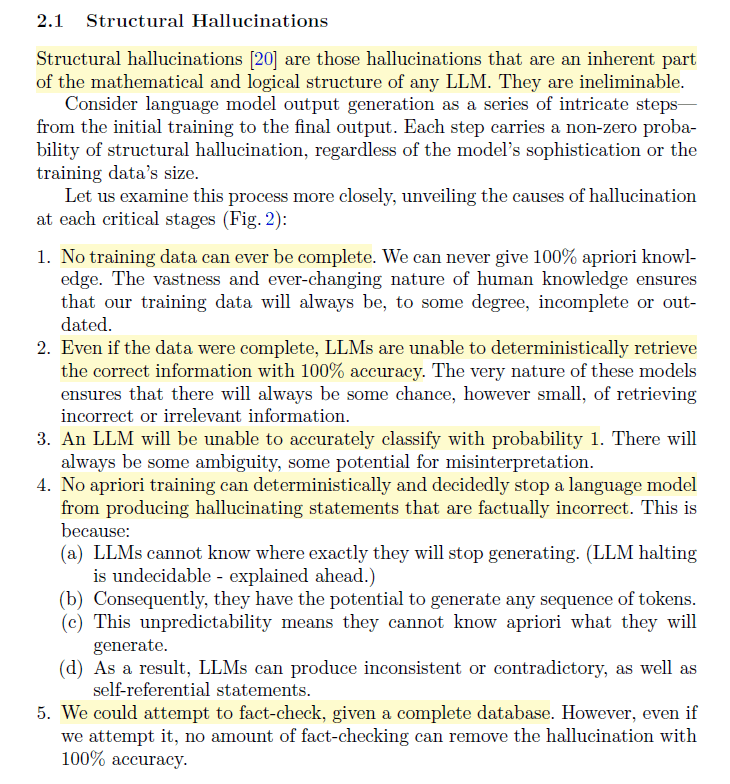

This suggests that structural hallucinations are an inherent part of the mathematical and logical structure of the #LLM, not the result of a glitch or a bad prompt. LLMs are probabilistic engines, with no understanding of ‘truth’**.
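To make the "probabilistic engine" point concrete, here is a toy sketch of next-token sampling. It is not from the paper and not a real model: the tokens, the scores in `logits` and the prompt are all made up for illustration. It only shows that when output is drawn from a probability distribution, plausible-but-wrong options keep a non-zero chance of being picked, and nothing in the sampling step checks for truth.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into a probability distribution over tokens."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


# Hypothetical scores for the token following "The capital of Australia is"
logits = {"Canberra": 3.0, "Sydney": 2.0, "Melbourne": 1.0}
probs = softmax(logits)

# Sample 1000 completions; the seed only makes the run repeatable.
rng = random.Random(0)
samples = rng.choices(list(probs), weights=list(probs.values()), k=1000)
wrong_rate = sum(tok != "Canberra" for tok in samples) / len(samples)

print(probs)
print(f"wrong answers in 1000 samples: {wrong_rate:.1%}")
```

The correct answer is the most likely one here, yet a sizeable share of samples still come out wrong. Lowering the scores of the wrong options shrinks that share but never takes it to exactly zero.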

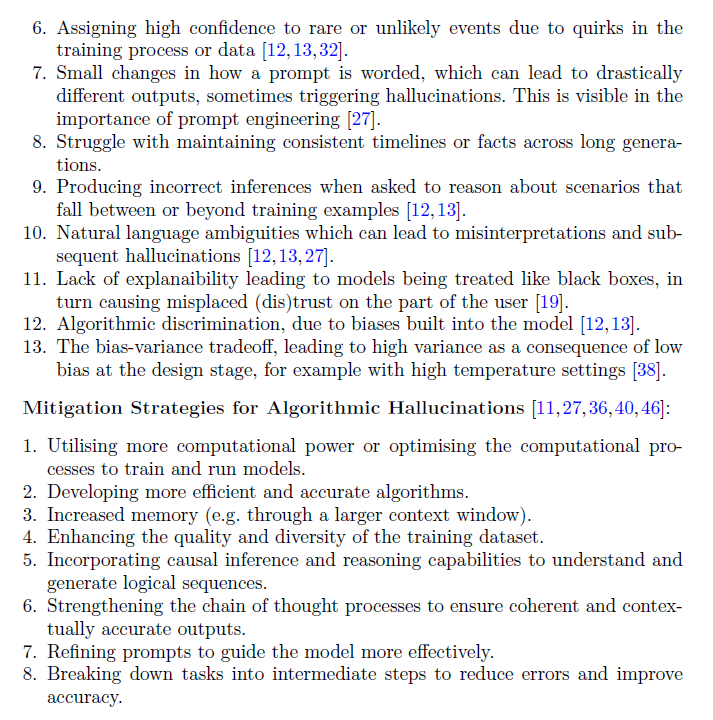

Algorithmic hallucinations relate to issues with the training data (its use or accuracy), optimisation techniques, inference algorithms and more.

They’re said to be a reducible component of hallucinations: more computational resources and better algorithms, model architecture and data can shrink them.

This reminds me a little of the distinction between aleatory uncertainty (the inherent, random variability that can’t be resolved simply by adding more info) and epistemic uncertainty (the limits of our knowledge, which more info can reduce).
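A minimal sketch of that analogy, using a biased coin rather than anything LLM-specific. The bias `p` and the function names are hypothetical, chosen only for illustration. Uncertainty about the coin's bias (epistemic) shrinks as we collect more flips, while the randomness of any single flip (aleatory) stays the same however much data we have.

```python
import math


def standard_error(p, n):
    """Uncertainty about the estimated bias after n flips (epistemic)."""
    return math.sqrt(p * (1 - p) / n)


def outcome_variance(p):
    """Variance of a single flip; does not depend on n (aleatory)."""
    return p * (1 - p)


p = 0.3  # hypothetical true bias of the coin
for n in (10, 1_000, 100_000):
    print(n, round(standard_error(p, n), 4), round(outcome_variance(p), 4))
```

The second column falls as `n` grows; the third does not move. On this reading, algorithmic hallucinations would sit in the second column and structural ones in the third, though that mapping is only a loose analogy.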

(** Others have argued that LLMs could be considered to have some understanding of ‘truth’ because of reinforcement learning from human feedback.)

Source: Banerjee, S., Agarwal, A., & Singla, S. (2025, August). LLMs will always hallucinate, and we need to live with this. In Intelligent Systems Conference (pp. 624-648). Cham: Springer Nature Switzerland.

PS. Check out my new YouTube channel: https://www.youtube.com/@Safe_As_Pod