Really interesting book chapter about the vocabulary of safety at NASA prior to the 2003 Columbia shuttle disaster.

WAY too much to cover, so a few extracts.

The vocabulary of safety means “the interrelated set of words used to guide organizational communications regarding known and unknown risks and danger to the mission, vehicle, and crew of the space shuttle program”.

This chapter is a bit critical of the Columbia Accident Investigation Board’s (CAIB) conclusion of a “broken safety culture” at NASA. It argues that, in part, the language of safety wasn’t properly calibrated to uncertainty and ambiguity, and hence didn’t allow for frank and critical discussions or calibrated perspectives on risk.

Quoting the paper, “NASA engineers and managers were aware of the potential risks of foam debris on the shuttle’s thermal protection system (TPS), but considered the likelihood of danger as remote, and an acceptable risk. While vocabularies are but one aspect of an organization’s culture, they are a critical part”.

Language matters because “values and assumptions of an organization’s culture are expressed through its language”, and it offers insight into “what organizations think”.

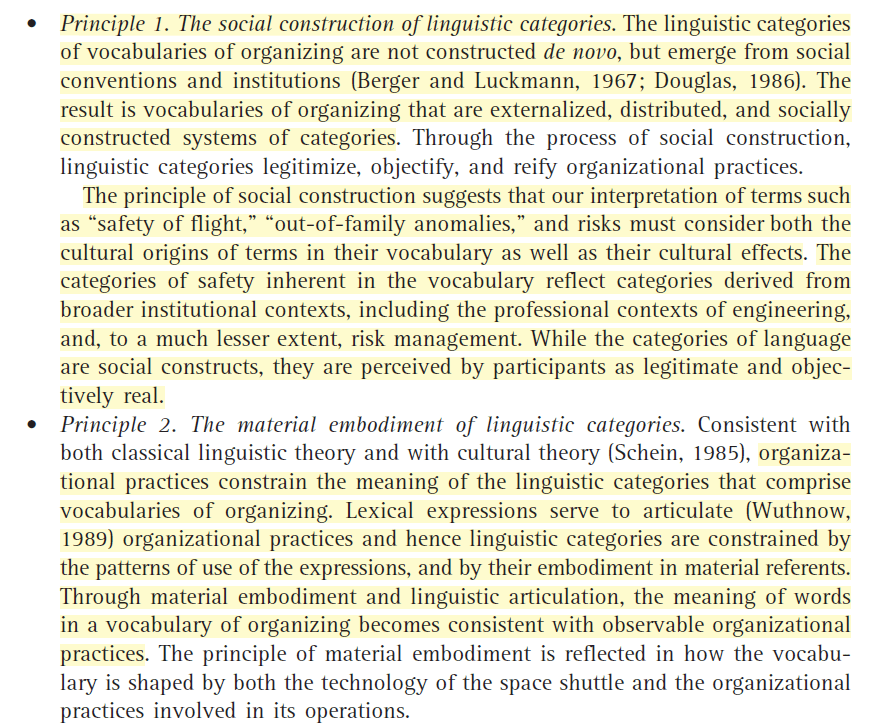

It’s said that linguistic categories that make up vocabularies emerge from social conventions and institutions. Through this social construction, “linguistic categories legitimize, objectify, and reify organizational practices”.

The author discusses the vocabularies of risk at NASA. Too lazy to write it out, so here’s a picture:

While categories of language are socially constructed, they are “perceived by participants as legitimate and objectively real”. It’s said that “the meaning of words in a vocabulary of organizing becomes consistent with observable organizational practices”, while the vocabulary in turn also shapes those practices.

That is, “The vocabulary of safety, while socially constructed, must articulate with the material reality of the shuttle, its technology, and the resources and routines utilized in the space shuttle program”. Hence, while vocabulary shapes how “participants understand, classify, and react to foam debris, the foam debris itself exists independently of the vocabulary”.

Put simply, while the laws of physics and economic principles exist independently of language, it’s language that allows people to understand and express these facets.

Faster Better Cheaper

A core vocabulary across NASA was “faster, better, cheaper” (FBC). I’ve skipped a lot in this section, but FBC was said to be best understood as a management style rather than a “highly institutionalized culture and vocabulary”.

Critically, one manager remarked that “We’ve never been able to define what better is in any meaningful way”. Others argued that FBC “focused agency attention away from flight safety considerations”.

Throughout NASA’s activities, it’s said that there were “no explicit attempts … made to make better links between the vocabulary and improved safety”. Notably, the importance of pursuing FBC was stressed while “viewing safety as a separate category of desiderata, a constraint to be observed rather than a goal to be pursued”.

Because space flight is inherently risky, and that risk is to a degree unavoidable, “making space flight safer was not a top priority”. Instead, “Safety first for NASA is viewed operationally, as taking steps to avoid and prevent known safety problems while acknowledging the high risks of space flight”. Overall, it’s argued that there was “limited focus on investing in safety improvements”.

Finding 1. The official language of NASA headquarters, exemplified by the philosophy of “faster, better, cheaper,” viewed safety primarily as a constraint not to be compromised rather than as a goal to be achieved and improved upon.

Next, the author dives into the specific language used in key safety meetings. It’s noted that issues were couched in their own special vocabulary, like “problems, issues, constraints, assessments, waivers, anomalies, open, and closed”. Issues weren’t treated as issues unless they were framed in this language.

A number of terms were also used to capture safety issues, like: no risk to safety of flight, adequate factor of safety, adequate thermal protection, margin of safety unaffected, no increased risks, safe to fly, etc. However, “no risk to safety of flight” doesn’t mean no risk or little risk, but rather an acceptable risk (recall the earlier point that space flight is inherently risky and some risks simply have to be accepted).

Finding 2. The vocabulary of organizing views safety as a bureaucratic constraint within NASA’s space shuttle program, embodied in formalized organizational practices, primarily, its Flight Readiness Review, Mission Management Team, and Failure Mode and Effects Analysis systems.

Finding 3. Safety classifications were based on engineering analysis and judgment, mediated by experience.

Next, it’s said that NASA’s vocabulary of safety didn’t accommodate uncertainty and ambiguity, despite the program facing substantial uncertainty about the causes of foam losses.

On this, given that the vocabularies and technical classification schemes provide a set of concepts for analysing and communicating problems, “Anything that is easily described and discussed in terms of these concepts can be communicated readily in the organization; anything that does not fit the system of concepts is communicated only with difficulty”.

Therefore, what the organisation and its members focus on and discuss tends to be shaped by the existing vocabularies. Whatever doesn’t fit into the vocabularies tends to be discounted, converted or ignored.

Finding 4. Safety classifications absorbed uncertainty, particularly at the level of the Mission Management Team.

The author discusses Vaughan’s research on the Challenger disaster. For NASA, key terms included acceptable risk, anomaly, C1, C1R, catastrophic, discrepancy, hazard, launch constraints, loss of mission and more.

For Vaughan, this language at NASA was “by nature technical, impersonal, and bureaucratic”. And while this vocabulary was effective for defining and focusing on the riskiest shuttle components, its categories “became ineffective as indicators of serious problems because so many problems fell into each category”.

Terms evolved in meaning, too. Take “no safety of flight issue”, which came to cover issues “that were deemed potentially safety of flight issues but had become accepted risks for the agency”. That is, these issues weren’t genuinely free of safety of flight concerns; they were acceptable or tolerable risks.

Problematically, though, NASA was tracking a large number of potential or accepted risks as part of operating the shuttle: thousands of waivers and anomalies, all just part of the business of space flight. Because space flight is inherently risky, NASA had come to accept many risks as part of doing business, and the distinctions between “acceptable risk”, “low risk” and “not a safety of flight issue” became blurred.

Hence, this type of technology necessarily involves so many approved deviations from design that potential safety of flight issues have to be converted into acceptable risks, “not because the risk has been removed, but because the risk is considered remote and need not be explicitly revisited”.

Finding 5. Post-Challenger, the term “safety of flight” became an institutionalized expression within NASA’s organizational culture, but this expression was subject to semantic ambiguity with two separate meanings: (1) free from danger to mission, vehicle, and crew; and (2) an acceptable risk.

Finding 6. The vocabulary of safety was closely articulated with the material environment of NASA’s space shuttle program, and shaped by the technology, financial constraints, and the political-economic coalitions supporting continuation of the shuttle program.

Finding 7. While the term risk was ubiquitous, its meaning and its relationship to safety were highly ambiguous and situated within the multiple intersecting subcultures of NASA’s space shuttle program.

Finding 8. NASA’s focus on safety of flight criteria in its vocabulary allowed NASA’s managers and engineers to perceive the post-Challenger culture as a safety culture, despite the inherent risks in the space shuttle technology.

Next, the author discusses how people prefer to construct “plausible, linear stories of how failure came about once we know the outcome”. But prior to the Columbia disaster, NASA scientists and engineers didn’t know or understand the extent to which foam debris could pose a significant flight safety risk to the reinforced carbon-carbon (RCC) panels, and hence to the thermal protection system.

This contrasts with Challenger. There, O-ring problems “led to worsening signals of potential danger”, whereas for Columbia the “foam losses provided increasing, albeit, in hindsight, misleading, signals of the relatively low levels of risk associated with foam losses”.

Finding 9. Based on a combination of flight experience and engineering analysis, NASA’s space shuttle program learned that foam debris was an “acceptable risk,” a “turnaround” or maintenance issue, and not a “safety of flight” issue.

Finding 10. The vocabulary of safety normalized deviance, as defined by Vaughan, but without such normalization the space shuttle would not have flown post-Challenger.

Drawing on March’s 1976 work, it’s argued that “not all organizational learning is based on correct knowledge or understanding”. Organisations often learn from incomplete and partial knowledge, which is then, in theory, superseded. NASA’s learning about the effects of foam debris on the RCC panels progressed in the same way. Their misplaced learning was “due to both bounded rationality and incomplete knowledge, which are endemic to organizations, independently of their specific culture”.

The author then briefly discusses the disaster in the context of Perrow’s work: the shuttle had high interactive complexity, arising from the interactions between the foam ramps, insulation, RCC panels and more. However, it wasn’t tightly coupled; rather, these systems were loosely coupled.

While tight coupling gets more air time in discussions of disasters, loose coupling is even more challenging to address because of the lag between cause and effect, which makes problems more difficult to anticipate.

It’s further argued that the Mission Management Team, whose role was to guarantee the safety of the shuttle and crew, “did not explicitly consider risk or uncertainty”. Moreover, the deterministic assessment of foam damage resulted in a binary categorisation of either safety of flight or not; there was “no consideration of the risks or uncertainties inherent in the decision”.

[** Some separate risk and uncertainty, whereas others combine them, treating risk as involving uncertainty. Knight proposed that risk is something knowable that can be estimated, whereas uncertainty is something we can’t estimate. Aven proposed a definition of risk that includes an assessment of the degree of knowledge and confidence, thereby incorporating uncertainty about our knowledge.]

Finding 13. The vocabulary of the STS-107 Mission Management Team was not hospitable to discussions of risks and uncertainty, and this vocabulary may have been a contributing factor in the failure to discuss imaging requests during the meeting.

CONCLUSIONS

In all, it’s argued:

· At NASA, “I find a vocabulary and a culture that views safety as a constraint to be observed rather than a goal to be improved”

· “I further find a culture where the meaning of safety of flight is ambiguous, meaning both ‘free from known danger’ and ‘flying under conditions of accepted risk’”

· “This vocabulary and this culture treat risks qualitatively rather than quantitatively, thereby making accepted risks opaque to most participants in their daily phenomenology of shuttle operations, not something to be attended to”

· Further, on the issue of known risks of RCC panels and foam debris: “only in hindsight can we attribute this failure of imagination to the vocabulary or to the culture”

· And “The methodology of risk assessment at NASA was not up to the task of identifying an undocumented risk not subject to previous experience. I would attribute this failure to the inherent complexity of the technology, rather than to the vocabulary or the culture”

Ref: Ocasio, W. (2005). The opacity of risk: Language and the culture of safety in NASA’s space shuttle program. Organization at the limit: Lessons from the Columbia disaster, 101-121.