I think this is one of the better uses of LLMs in investigations – the authors trained their model to evaluate accident reports and extract key details from them.

They found:

· It could extract key information from unstructured data and “significantly reduce the manual effort involved in accident investigation report analysis and enhance the overall efficiency”

· “the chatbot supports multi-turn conversations, allowing users to engage in deeper discussions about specific accident details and obtain more detailed results through continuous”

· “it lowers the professional threshold for accident analysis, allowing more subway construction industry practitioners to analyze accident investigation reports clearly”

Instead of trying to use it to replace people, or as a significant element of investigations, it was put towards the menial tasks of structuring and extracting insights, and I suppose pre-populating text fields in the accident reporting system.

I like this area of machine learning, where it can free up cognitive bandwidth so people can focus on the more interesting and important things: learning and improvement, field work, etc.

There is, of course, so much additional value to be had from these models, such as analysing sentiment and valence, climatic factors, assumptions, biases, judgemental language and more, and they can also be put towards prospective learning.

It also isn't necessarily about trying to create comprehensive shopping lists of causal or contributing factors, but about freeing up time from less-productive tasks so it can go towards finding long-term trends and blindspots in our thinking and assumptions.

[** This isn’t an endorsement of their AIR model – these things are a dime-a-dozen.]

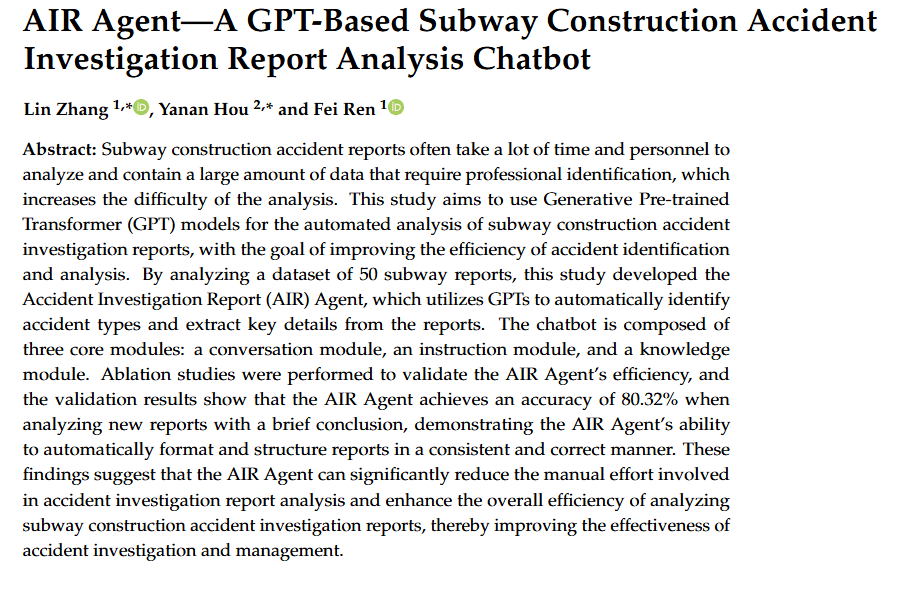

Ref: Zhang, L., Hou, Y., & Ren, F. (2025). AIR Agent—A GPT-Based Subway Construction Accident Investigation Report Analysis Chatbot. Buildings, 15(4), 527.

Study link: https://doi.org/10.3390/buildings15040527