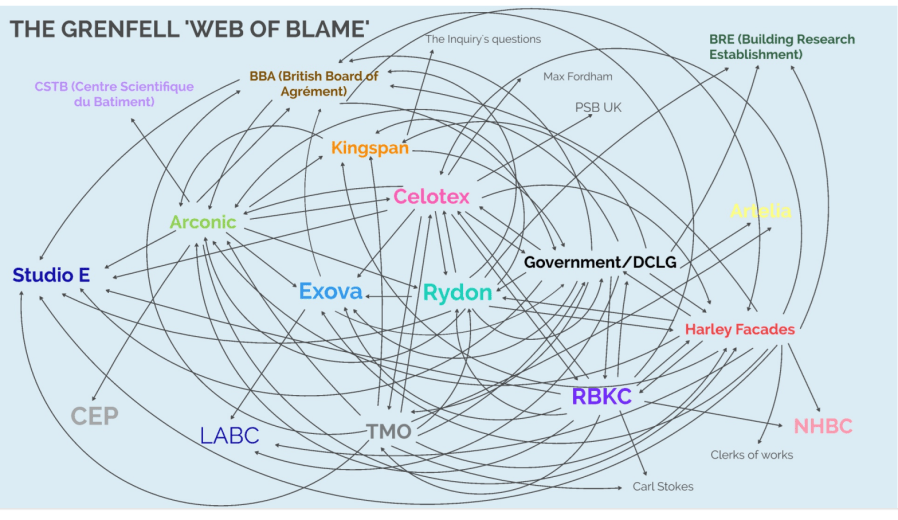

The Grenfell ‘web of blame’, as presented by Counsel (Millett, KC) in the Inquiry’s closing submissions. Not much to say about this, as I’m posting a study tomorrow that applied network analysis to this web of blame. But it’s worth noting that actors across multiple societal levels contributed to spinning this web of blame.… Continue reading Grenfell’s ‘web of blame’: accusations, defences, and counter-accusations

Year: 2025

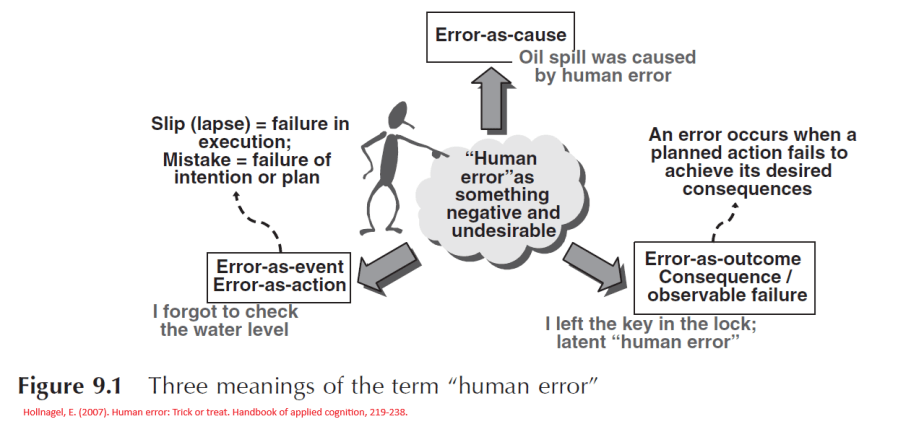

Safe As 62: Types of error – error as cause, event, or harm

What do we mean when we say ‘human error’? Do we mean error as a cause, an event, or a harm? And does error language more broadly mask the underlying human variability and increase the risk of blame? Sources: 1. Hollnagel, E. (2007). Human error: Trick or treat. Handbook of applied cognition, 219-238. 2. Read, G. J., Shorrock, S.,… Continue reading Safe As 62: Types of error – error as cause, event, or harm

SafeWork prosecution and the legal definition of risk, reasonably practicable, and what ought reasonably to have been known

This prosecution judgement relates to a serious injury that occurred when heavy stone slabs tipped off a truck tray onto a worker. Some extracts I found interesting: · “state of knowledge applied to the definition of practicable is objective. It is that possessed by persons generally who are engaged in the relevant field of activity and not the… Continue reading SafeWork prosecution and the legal definition of risk, reasonably practicable, and what ought reasonably to have been known

Definitions of risk and risk as a product of the strength of knowledge

While on the topic of risk (see the post from yesterday – link below), the first two images show a group of definitions. Images 2/3 below highlight one perspective from Prof Terje Aven. And, without excluding other definitions, here they argue that risk can include: “identified events and consequences, assigned probabilities, uncertainty intervals, strength of… Continue reading Definitions of risk and risk as a product of the strength of knowledge

Risk as a social construction and notes on quantification – from Prof Terje Aven

Really interesting pod with Prof Terje Aven discussing risk science. Some extracts: · “there is often a difference between experts’ risk judgments and people’s risk perception. But this difference can be explained also by the fact that people’s judgments could incorporate aspects of uncertainty not covered by the experts’ risk perspectives” · “Experts often restrict their assessment… Continue reading Risk as a social construction and notes on quantification – from Prof Terje Aven

Safe As 61: Another deep dive on human performance in barrier systems

How should we plan, design and integrate human performance into our risk control and barrier systems? This episode draws on four sources: Spotify: https://open.spotify.com/episode/4ML522ibTw8Uso8NzSkCP6?si=KLOq-dafQbqj6kggn1l1Nw

95% of accidents due to human error is implausible – more likely a design problem: Don Norman

Apt reflections from Don Norman about error. While he could believe that 1 to 5% of accidents might primarily result from human error, he considers a claim of 75-95% implausible. If the percentage is so high, then “clearly other factors must be involved. When something happens this frequently, there must be another underlying factor”. Norman remarks… Continue reading 95% of accidents due to human error is implausible – more likely a design problem: Don Norman

Failures of critical controls, risk normalisation, and weak governance: Callide investigation report findings

Extracts from the Callide investigation, which involved a significant boiler pressure event. Thanks to @wade n for flagging this report. I won’t go into the event details here, so check out the report. Extracts: · “The incident reflects a systemic failure to manage both technical and organisational risks, highlighting the critical need for integrated system reviews, clearly defined… Continue reading Failures of critical controls, risk normalisation, and weak governance: Callide investigation report findings

Safe As E60: Psychology of risk and motivating less risky decisions

How can we motivate, or even design for, more desirable and safer decisions on health, safety and life, and disincentivise riskier decisions? This episode explores Gerald Wilde’s four tactics for motivating safer behaviour, from his book ‘Target Risk 3’. Spotify: https://open.spotify.com/episode/40zBw6nzY04Fzvp77zWcnB?si=zDd6ZxY4SqyhRlWJ2oyzZw

“We’ll know we have a problem, and we’ll fix it!” – Overestimating human response as a safeguard

This article discusses some common mistakes and assumptions about human performance in complex environments. Extracts: · “Humans are not great at assessing risk. Engineers tend to be an optimistic lot, ready to solve any problem by relying on their technical skills and experience” · And while it’s “incredibly tempting, in the relative calm of process hazard… Continue reading “We’ll know we have a problem, and we’ll fix it!” – Overestimating human response as a safeguard