How generative AI reshapes construction and built environment: The good, the bad, and the ugly

This paper discusses some of the good, bad and ugly of GenAI use in construction. GenAI is “poised to fundamentally transform the Construction and Built Environment (CBE) industry” but is also a “dual-edged sword, offering immense benefits while simultaneously posing considerable difficulties and potential pitfalls”. Not a summary – just a few extracts: The Good: · GenAI…

Tag: chatgpt

Large language models powered system safety assessment: applying STPA and FRAM

An AI, STPA and FRAM walk into a bar…ok, that’s all I’ve got. This study used ChatGPT-4o and Gemini to apply STPA and FRAM to analyse the “liquid hydrogen (LH2) aircraft refuelling process, which is not a well-known process, that presents unique challenges in hazard identification”. One of several studies applying LLMs to safety…

How Much Content Do LLMs Generate That Induces Cognitive Bias in Users?

This study explored when and how Large Language Models (LLMs) expose the human user to biased content, and quantified the extent of biased information. E.g. they fed the LLMs prompts and asked them to summarise, and then assessed how the LLMs changed the content or context, hallucinated, or altered the sentiment. Providing context: · LLMs “are…

Cut the crap: a critical response to “ChatGPT is bullshit”

Here’s a critical response paper to yesterday’s “ChatGPT is bullshit” article from Hicks et al. Links to both articles below. Some core arguments: · Hicks et al. characterise LLMs as bullshitters, since LLMs “cannot themselves be concerned with truth,” and thus “everything they produce is bullshit” · Hicks et al. reject anthropomorphic terms such as hallucination or confabulation, since…

ChatGPT is bullshit

This paper challenges the label of AI hallucinations, arguing instead that these falsehoods are better described as bullshit. That is, bullshit in the Frankfurtian sense (‘On Bullshit’, published in 2005): the models are “in an important way indifferent to the truth of their outputs”. This isn’t BS in the sense of junk data or analysis, but…

ChatGPT in complex adaptive healthcare systems: embrace with caution

This discussion paper explored the introduction of AI systems into healthcare. It covers A LOT of ground, so just a few extracts. Extracts: · “This article advocates an ‘embrace with caution’ stance, calling for reflexive governance, heightened ethical oversight, and a nuanced appreciation of systemic complexity to harness generative AI’s benefits while preserving the integrity of…

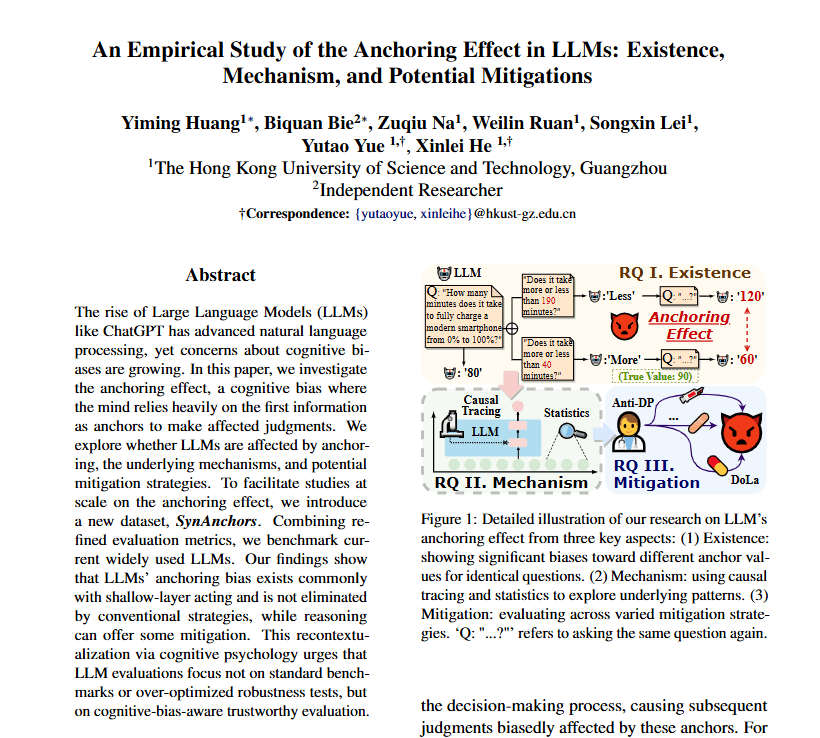

An Empirical Study of the Anchoring Effect in LLMs: Existence, Mechanism, and Potential Mitigations

This study found that several LLMs are fairly easily influenced by anchoring effects, consistent with human anchoring bias. Extracts: · “Although LLMs surpass humans in standard benchmarks, their psychological traits remain understudied despite their growing importance” · “The anchoring effect is a ubiquitous cognitive bias (Furnham and Boo, 2011) and influences decisions in many fields” · “Under uncertainty,…

LLMs Are Not Reliable Human Proxies to Study Affordances in Data Visualizations

This was pretty interesting – it compared GPT-4o to people in extracting takeaways from visualised data. They were also interested in how well the LLM could simulate human respondents/responses. Note that the researchers are primarily interested in whether the GPT-4o model acts as a suitable proxy for human responses – they recognise there are other…

Harnessing the power of ChatGPT to promote Construction Hazard Prevention through Design (CHPtD)

This study tested whether ChatGPT can assist in hazard recognition during Construction Hazard Prevention through Design (CHPtD) sessions (e.g. safety in design). Via a randomised controlled experimental design, 162 civil and construction engineering students were tasked with hazard recognition activities with or without ChatGPT assistance. Providing background: Results: Ref: Uddin, S. J., Albert, A., & Tamanna,…

Can ChatGPT exceed humans in construction project risk management?

This study pitted ChatGPT 4 against competent construction personnel (project/site managers, engineers etc.) in a project risk management task. They specifically compared results between the AI model and people on a construction project case study: · Identify and list the potential project risks · Which risks are most critical and analyse them? · How are these risks…